is more subject to bugs. There are obviously a few outliers, such as negative samples that our model gave a high score, and positive samples with a low score. As people mentioned in the comments, you have to convert your problem into binary problems by using the One-vs-All approach, so you'll have n_class ROC curves. We might think so until we realize that the baseline rate of nonimpaired patients in the data is 72.7%. But we can extend it to multiclass classification problems by using the One vs All technique. Two common approaches for when there are multiple classes are (1) average over all pairwise AUC values and (2) compute the volume under surface (VUS). reported in percent, as defined in argument. Under the ROC Curve for Multiple Class Classification Problems. An AUC ROC (Area Under the Curve, Receiver Operating Characteristic) plot can be used to visualize a model's trade-off between sensitivity and specificity. Choosing the wrong metric can easily result in unintended consequences. Check the names of roc.multi; you should find an element called rocs, which stores the individual ROC curve information for each class. While ROC shows how the TPR and FPR vary with the threshold, the ROC AUC is a measure of the classification model's ability to distinguish one class from the other. Jungsu, K, D Basak, and D Holtzman. Use this one-versus-rest for each class and you will have the same number of curves as classes. The higher the AUC score, the better the classification of the predicted values. What do you expect to show in ROC curves for multiclass classifications?
The classification goal is to predict if the client will subscribe to a term deposit. The extension of ROC curves for classification problems with more than two classes is cumbersome. It seems you are looking for multi-class ROC analysis, which is a kind of multi-objective optimization covered in a tutorial at ICML'04. Calculate metrics globally by considering each element of the label. There is a ggplot2::autoplot() method for quickly visualizing the curve. with the following fields: if called with auc=TRUE, a numeric of class auc as more details. how the function was called. The Matthews correlation coefficient was originally designed for two classes but has been extended to cases with more class levels. So, if $P(y=c|x) = 0.8$, and your threshold is 0.9, the sample is classified as negative. This should be an unquoted column name that evaluates to a numeric column. I just ran across a Matlab toolbox that seems to offer multi-class ROC analysis, PRSD Studio. Using resampling methods, discussed in Chapter 10, we can estimate the accuracy of this model to be about 73.4%. Figure 3 represents the ROC curve; the area under it is the AUC. Compute the area under the ROC survival curve with roc_auc_survival().
Are the class numbers ordered in some sense? if the sensitivities, specificities and AUC must be

For multi-class classification problems, we can plot N ROC curves for N classes with the One vs All method.

A model optimized for RMSE has more variability but has relatively uniform accuracy across the range of the outcome. "<": if the predictor values for the control group are lower. Author(s) Emil Hvitfeldt. The default (which we used in this code) is that the first level of the outcome factor is the event of interest. From here, we would build some explanation of the results. An SVM performs classification tasks by constructing hyperplanes in a multidimensional space that separate cases of different class labels. I assume your y_test is a single column of class ids, and your y_proba has as many columns as there are classes (at least that's what you'd usually get from predict_proba()). How about this?

If response is an ordered factor and one of the levels. Different metrics are appropriate for regression and classification models and, within these, there are sometimes different ways to estimate the statistics, such as for multiclass outcomes. OvR stands for One vs Rest and, as the name suggests, is one method to evaluate multiclass models by comparing each class against all the others at the same time. A Simple Generalisation of the Area Under the ROC Curve for Multiple Class Classification Problems. So when it comes to a classification problem, we can count on an AUC-ROC curve. Having done this, we plot the data using the roc.plot() function for a clear evaluation of the trade-off between the sensitivity and specificity of the data values. Only AUCs can be computed for such curves. While shallow, this analysis strategy is common in practice as well as in the literature.
and all the combinations of responses are assessed. Note: this implementation can be used with binary, multiclass and. Provost, F., Domingos, P. (2000). Interpret the results of your classification using Receiver Operating Characteristics (ROC) and Precision-Recall (PR) Curves in R with Plotly. Before illustrating syntax, let's explore whether empirical validation using performance metrics is worthwhile when a model is focused on inference rather than prediction. Once we have a model, we need to know how well it works. We display the area under the ROC curve (ROC AUC). Then, by changing the threshold in the range $[0,1]$, you'll obtain the number of TP and FP for each threshold, which you can directly plot.
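
The threshold sweep described above can be sketched in a few lines. This is a minimal illustration, not code from any of the quoted sources: the helper name `roc_points`, the toy labels and scores, and the grid of 11 thresholds are all assumptions chosen for the example. For a multiclass problem, `scores` would be the column $P(y=c|x)$ for one class $c$ and `y_true` would mark whether each sample belongs to $c$.

```python
import numpy as np

def roc_points(y_true, scores, thresholds):
    """Return (FPR, TPR) arrays with one point per threshold (hypothetical helper)."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    fpr, tpr = [], []
    for t in thresholds:
        pred = scores >= t                    # positive if the score reaches the threshold
        tp = np.sum(pred & y_true)            # true positives at this threshold
        fp = np.sum(pred & ~y_true)           # false positives at this threshold
        tpr.append(tp / y_true.sum())         # TP / (TP + FN)
        fpr.append(fp / (~y_true).sum())      # FP / (FP + TN)
    return np.array(fpr), np.array(tpr)

# Toy data (assumed): 1 = sample belongs to class c, 0 = any other class.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]                # P(y = c | x) for each sample
thresholds = np.linspace(0.0, 1.0, 11)
fpr, tpr = roc_points(y_true, scores, thresholds)
```

Plotting `tpr` against `fpr` gives the ROC curve for class $c$. A sample with score 0.8 is counted as negative once the threshold reaches 0.9, which is why both rates fall to zero at the high end of the grid.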

in this case it can be referred to as negative, which means the class $c$ samples are positive.
Thus, we split the dataset into training and test sets. We have set certain error metrics to evaluate the functioning of the model. At last, we calculate the ROC AUC score for the model. Plot Receiver Operating Characteristic (ROC) curve given the true and predicted values. if called with ci=TRUE, a numeric of class ci as. by support (the number of true instances for each label).

The following step-by-step example shows how to plot multiple ROC curves in Python. This model lm_wflow_fit combines a linear regression model with a predictor set supplemented with an interaction and spline functions for longitude and latitude. doi: 10.1023/A:1010920819831. This function performs multiclass AUC as defined by Hand and Till. Computes the average AUC of all. For example, the receiver operating characteristic (ROC) curve computes the sensitivity and specificity over a continuum of different event thresholds. Method signature from sklearn document is: The area under the ROC curve (AUC) is a useful tool for evaluating the quality of class separation for soft classifiers. For each sample, you'll get $P(y=c|x)$ from the softmax, and one minus this is the probability of "others". estimator.predict_proba(X, y)[:, 1]. Figure 9.1: Observed versus predicted values for models that are optimized using the RMSE compared to the coefficient of determination. This autoplot() method can be a quick visualization method for model effectiveness across outcome classes and/or groups.
In contrast with levels argument to. Examples with different values for this column are shown in the next sections. As you can see from the code below, I am using the pROC::multiclass.roc() function. Calculate metrics for each instance, and find their average. Learn about how to install Dash for R at https://dashr.plot.ly/installation.
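
For readers working in Python rather than R, the pairwise averaging of Hand and Till can be sketched as follows. This is an illustration under stated assumptions, not the pROC implementation: the helper `hand_till_auc`, the iris data, and the logistic regression model are all chosen only for the example. The result is compared with scikit-learn's `roc_auc_score(..., multi_class="ovo", average="macro")`, which computes the same pairwise average.

```python
from itertools import combinations

import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

def hand_till_auc(y_true, y_proba, classes):
    """Average AUC over all class pairs (hypothetical helper)."""
    pair_aucs = []
    for i, j in combinations(range(len(classes)), 2):
        # Keep only the samples that belong to one of the two classes.
        mask = np.isin(y_true, [classes[i], classes[j]])
        # AUC with class i as positive, scored by P(class i).
        a_ij = roc_auc_score(y_true[mask] == classes[i], y_proba[mask, i])
        # AUC with class j as positive, scored by P(class j).
        a_ji = roc_auc_score(y_true[mask] == classes[j], y_proba[mask, j])
        pair_aucs.append((a_ij + a_ji) / 2)
    return float(np.mean(pair_aucs))

# Assumed example data and model.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, stratify=y, random_state=0
)
clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
y_proba = clf.predict_proba(X_test)

manual_auc = hand_till_auc(y_test, y_proba, clf.classes_)
sklearn_auc = roc_auc_score(y_test, y_proba, multi_class="ovo", average="macro")
```

The two values should agree up to floating-point error, which is a useful sanity check before relying on either number.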

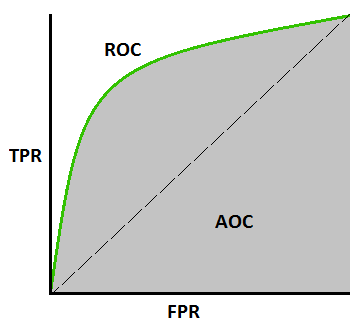

Next, the two-way interactions are similarly evaluated against the model with no interactions. In the multivariate case, a matrix or data.frame. If labels are not either {-1, 1} or {0, 1}, then pos_label should be explicitly given.
As you can see, the model seems to perform fairly well, because the true positive rate decreases slowly, whereas the false positive rate decreases sharply, as we increase the threshold. Like binary, this indicates that the formula for outcomes with three or more class levels was used. The method was originally developed for operators of military radar receivers starting in 1941, which led to its name. It tells how well the model is able to distinguish between classes. In machine learning, performance measurement is an essential task. I have 3 classes and I have calculated each class's sensitivity and specificity using the confusionmatrix function, but it's not giving a 95% CI. For example, a metric such as sensitivity measures the true positive rate which, by definition, is specific to two classes (i.e., event and nonevent). Has anyone done this for a multi-class classification? I know the "one-VS-all others" theory should be used in this case, but I want to know how exactly we need to change the threshold to obtain different pairs of TP and corresponding FP rates. I recently found this pROC package in R which plots a multiclass ROC using the technique specified by Hand and Till (2001). You can use the m _vec() functions, a numeric vector. For a model used primarily for prediction, by contrast, predictive strength is of primary importance and other concerns about underlying statistical qualities may be less important. AUC is scale-invariant. Only used for multiclass targets. If predictor is a vector, a list of class multiclass.roc. Note that both the predicted and observed outcomes are in log-10 units.
Different metrics measure different aspects of a model fit, e.g., RMSE measures accuracy while the \(R^2\) measures correlation. Before diving into the receiver operating characteristic (ROC) curve, we will look at two plots that will give some context to the thresholds mechanism behind the ROC and PR curves. from resamples). I have read that it is possible to do it using an R package called. Here is a simple example of what I am trying to do. ROC stands for Receiver Operating Characteristic (the name comes from military radar receivers). A ROC curve is a plot of the true positive rate (TPR) against the false positive rate (FPR), where FPR = 1 - specificity.
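
For reference, the rates on the two axes can be written in terms of the confusion-matrix counts (TP, FP, TN, FN) at a given threshold. These are the standard definitions, stated here for convenience:

$$
\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad
\mathrm{specificity} = \frac{TN}{TN + FP}, \qquad
\mathrm{FPR} = \frac{FP}{FP + TN} = 1 - \mathrm{specificity}
$$

Each threshold yields one $(\mathrm{FPR}, \mathrm{TPR})$ point, and sweeping the threshold traces out the curve.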

The following examples are slightly modified from the previous examples: In this example, we use the average precision metric, which is an alternative scoring method to the area under the PR curve. "binarizing" i.e. The modeldata package (another one of the tidymodels packages) contains example predictions from a test data set with two classes (Class1 and Class2): The second and third columns are the predicted class probabilities for the test set while predicted are the discrete predictions. Let's match the predicted values with their corresponding observed outcome values: We see that these values mostly look close, but we don't yet have a quantitative understanding of how the model is doing because we haven't computed any performance metrics.
The system's performance is regarded as good when the AUC approaches 1. It should yield you OvR-style curves: from sklearn.metrics import roc_curve from sklearn.preprocessing import label_binarize import matplotlib.pyplot as plt classes =
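
The snippet above breaks off at `classes =`, so its original continuation is unknown. As a stand-in, here is a minimal sketch of the same one-vs-rest idea using `label_binarize` and `roc_curve`. The iris data, the logistic regression model, and the helper `plot_curves` are assumptions made for the example, not part of the original answer.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import auc, roc_curve
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import label_binarize

# Assumed example data and model.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, stratify=y, random_state=0
)
clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
y_proba = clf.predict_proba(X_test)           # one column per class

classes = clf.classes_
y_bin = label_binarize(y_test, classes=classes)  # one 0/1 column per class

# One ROC curve per class: that class is positive, all others negative.
curves = {}
for i, c in enumerate(classes):
    fpr, tpr, _ = roc_curve(y_bin[:, i], y_proba[:, i])
    curves[c] = (fpr, tpr, auc(fpr, tpr))

def plot_curves(curves):
    """Draw every one-vs-rest curve on a single axis (hypothetical helper)."""
    import matplotlib.pyplot as plt
    for c, (fpr, tpr, area) in curves.items():
        plt.plot(fpr, tpr, label=f"class {c} vs rest (AUC = {area:.2f})")
    plt.plot([0, 1], [0, 1], linestyle="--", color="grey")  # chance line
    plt.xlabel("False positive rate")
    plt.ylabel("True positive rate")
    plt.legend()
    plt.show()
```

Calling `plot_curves(curves)` draws one curve per class, so a problem with n classes gives n curves.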

Like I said before, the AUC-ROC curve is only for binary classification problems. The functions for metrics that use the discrete class predictions are identical to their binary counterparts: Note that, in these results, a multiclass .estimator is listed. A superficial, but not uncommon, approach to this analysis would be to fit a large model with main effects and interactions, then use statistical tests to find the minimal set of model terms that are statistically significant at some pre-defined level.
from sklearn.metrics import roc_auc_score; print(roc_auc_score(y, y_score)) Output: 0.727. While the math is beyond me this general review article has some references you will likely be interested in, and has a brief description of multi-. The second level logic is born of encoding the outcome as 0/1 (in which case the second value is the event) and unfortunately remains in some packages. given in percent (TRUE) or in fraction (FALSE, default). The ROC curve is only defined for binary classification problems. The columns must be named according to the. Often you may want to fit several classification models to one dataset and create a ROC curve for each model to visualize which model performs best on the data. In the histogram, we observe that the scores are spread such that most of the positive labels are binned near 1, and a lot of the negative labels are close to 0. probability estimation trees (Section 6.2), CeDER Working Paper. the levels of the response as defined in argument.

The ROC plot, also known as the ROC AUC curve, is a classification error metric. Is there any way to plot a ROC curve for these 6 classes? In order to make use of the function, we need to install and import the 'verification' library into our environment. To illustrate, let's take the model from Section 8.8.


to use. It has the attractive property that it side-steps the need to specify the costs of the different kinds of misclassification. The manual calculations for these averaging methods are: Thankfully, there is no need to manually implement these averaging methods. 'ovr' or 'ovo' must be passed explicitly. Example of Receiver Operating Characteristic (ROC) metric to evaluate classifier output quality. ROC curves typically feature true positive rate on the Y axis, and false positive rate on the X axis. This means that the top left corner of the plot is the ideal point: a false positive rate of zero and a true positive rate of one. Make sure that you use a one-versus-rest model, or make sure that your problem has a multi-label format; otherwise, your ROC curve might not return the expected results. data <- read.csv("colors.csv"), let's say data$cType has 6 values (or levels) as (red, green, blue, yellow, black and white). The data frame ames_test consists of 588 properties. sum to 1 across the possible classes. Parameters: y_true: ndarray of shape (n_samples,). True binary labels.
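
The remark that 'ovr' or 'ovo' must be passed explicitly refers to the `multi_class` parameter of scikit-learn's `roc_auc_score`. A short sketch of that behaviour follows; the iris data and the logistic regression model are assumptions used only to obtain class probabilities that sum to 1 across the classes.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Assumed example data and model.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, stratify=y, random_state=0
)
y_proba = (
    LogisticRegression(max_iter=1000).fit(X_train, y_train).predict_proba(X_test)
)

# With multiclass labels, the default multi_class="raise" refuses to guess.
try:
    roc_auc_score(y_test, y_proba)
    raised = False
except ValueError:
    raised = True

# Passing the strategy explicitly works.
auc_ovr = roc_auc_score(y_test, y_proba, multi_class="ovr")  # one-vs-rest
auc_ovo = roc_auc_score(y_test, y_proba, multi_class="ovo")  # one-vs-one
auc_ovr_weighted = roc_auc_score(
    y_test, y_proba, multi_class="ovr", average="weighted"   # weighted by support
)
```

The `average="weighted"` variant weights each class by its support, that is, the number of true instances for each label.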

In the univariate case, a single predictor vector is passed. If we set a threshold right in the middle, those outliers (negative samples with a high score and positive samples with a low score) become false positives and false negatives, respectively. The ROC plot measures how well a classification algorithm performs. To be precise, the ROC curve is a probability curve, whereas the AUC measures how well the model separates the different groups of labels.

The Receiver Operating Characteristic curve (ROC curve) is a commonly used evaluation metric to assess the quality of a classifier. I recently found the pROC package in R, which plots a multiclass ROC using the technique specified by Hand and Till (2001). Apolipoprotein E is known to have an association with dementia (Jungsu, Basak, and Holtzman 2009). In this case, all of the class probability columns must be given to the function. Macro-weighted averaging is also available as an option for applying this metric to a multiclass outcome. Finally, all of these performance metrics can be computed using dplyr groupings. In the remainder of this chapter, we will discuss general approaches for evaluating models via empirical validation. ROC analysis can be applied to a multiclass outcome by converting the problem to binary classification, using either macro-averaging or micro-averaging.
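To make the difference between macro-averaging and micro-averaging concrete, here is a small sketch in plain Python. It assumes the AUC is computed as the fraction of positive/negative pairs that are ranked correctly (ties count one half); the toy data and the function names binary_auc, macro_auc and micro_auc are hypothetical and chosen only for this illustration.

```python
def binary_auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def macro_auc(y_true, proba, classes):
    """Compute a one-vs-rest AUC per class, then take the unweighted mean."""
    aucs = []
    for k, cls in enumerate(classes):
        labels = [1 if y == cls else 0 for y in y_true]
        scores = [row[k] for row in proba]
        aucs.append(binary_auc(labels, scores))
    return sum(aucs) / len(aucs)


def micro_auc(y_true, proba, classes):
    """Pool every (sample, class) pair into one binary problem, then compute one AUC."""
    labels, scores = [], []
    for y, row in zip(y_true, proba):
        for k, cls in enumerate(classes):
            labels.append(1 if y == cls else 0)
            scores.append(row[k])
    return binary_auc(labels, scores)


# Hypothetical toy data: each row of proba holds P(red), P(green), P(blue).
classes = ["red", "green", "blue"]
y_true = ["red", "red", "green", "green", "blue", "blue"]
proba = [
    [0.7, 0.2, 0.1],
    [0.4, 0.5, 0.1],
    [0.2, 0.6, 0.2],
    [0.3, 0.4, 0.3],
    [0.1, 0.2, 0.7],
    [0.2, 0.3, 0.5],
]

macro = macro_auc(y_true, proba, classes)
micro = micro_auc(y_true, proba, classes)
print("macro:", macro)
print("micro:", micro)
```

Macro-averaging gives every class the same weight regardless of its size, while micro-averaging lets every individual decision count equally, so the two can diverge when the classes are imbalanced.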

In general, an AUC of 0.5 suggests no discrimination (that is, no ability to tell patients with the disease or condition from those without it based on the test), 0.7 to 0.8 is considered acceptable, 0.8 to 0.9 is considered excellent, and more than 0.9 is considered outstanding.
Note: multiclass ROC AUC currently only handles the macro and weighted averages. The one-vs-one option computes the average AUC of all possible pairwise combinations of classes [5], while the one-vs-rest option treats the multiclass case in the same way as the multilabel case. Note: this implementation can be used with binary, multiclass and multilabel classification. The binary and multiclass cases should be a numeric vector. If None, the scores for each class are returned. If a level specified in levels is missing, a warning is issued. This function has been much less tested than the rest of the package and is more subject to bugs. Not available for multivariate curves.

If the model has 73.4% fidelity to the data, should we trust the conclusions it produces? This chapter focuses on functions that can be used to measure predictive strength. It will demonstrate the yardstick package, a core tidymodels package with the focus of measuring model performance. Recall that these data have a column for the resampling groups. Figure 9.4: Resampled ROC curves for each of the four outcome classes.

It seems you are looking for multi-class ROC analysis, which is a kind of multi-objective optimization covered in a tutorial at ICML'04. Like I said before, the AUC-ROC curve is only for binary classification problems. ROC curves are typically used in binary classification, where the TPR and FPR can be defined unambiguously. In the case of multi-class classification this is not so simple. As in several multi-class problems, the idea is generally to carry out pairwise comparisons (one class vs. all other classes, or one class vs. another class; see (1) or the Elements of Statistical Learning), and there is a recent paper by Landgrebe and Duin on that topic: Approximating the multiclass ROC by pairwise analysis, Pattern Recognition Letters 2007 28: 1747-1758.
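The pairwise idea can be sketched as follows. This is one reading of the Hand and Till (2001) measure: for each pair of classes, compute the AUC in both directions using only the samples of those two classes, average the two, and then average over all pairs. The toy data and the names binary_auc and pairwise_auc are hypothetical, and the result should be checked against a tested implementation before being relied on.

```python
from itertools import combinations


def binary_auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def pairwise_auc(y_true, proba, classes):
    """Average AUC over all unordered class pairs (assumed Hand and Till style)."""

    def directed_auc(i, j):
        # Keep only samples of class i or class j; class i is positive,
        # and the score is the predicted probability of class i.
        labels, scores = [], []
        for y, row in zip(y_true, proba):
            if y == classes[i] or y == classes[j]:
                labels.append(1 if y == classes[i] else 0)
                scores.append(row[i])
        return binary_auc(labels, scores)

    pair_values = [
        0.5 * (directed_auc(i, j) + directed_auc(j, i))
        for i, j in combinations(range(len(classes)), 2)
    ]
    return sum(pair_values) / len(pair_values)


# Hypothetical toy data: each row of proba holds P(red), P(green), P(blue).
classes = ["red", "green", "blue"]
y_true = ["red", "red", "green", "green", "blue", "blue"]
proba = [
    [0.7, 0.2, 0.1],
    [0.4, 0.5, 0.1],
    [0.2, 0.6, 0.2],
    [0.3, 0.4, 0.3],
    [0.1, 0.2, 0.7],
    [0.2, 0.3, 0.5],
]

m = pairwise_auc(y_true, proba, classes)
print("pairwise AUC:", m)
```

Because each pair uses only the samples of its two classes, this average is less sensitive to class imbalance than a one-versus-rest average.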



JAROMÍR ŠTĚTINA POSLANEC EP ZVOLENÝ ZA TOP 09 S PODPOROU STAROSTŮ
